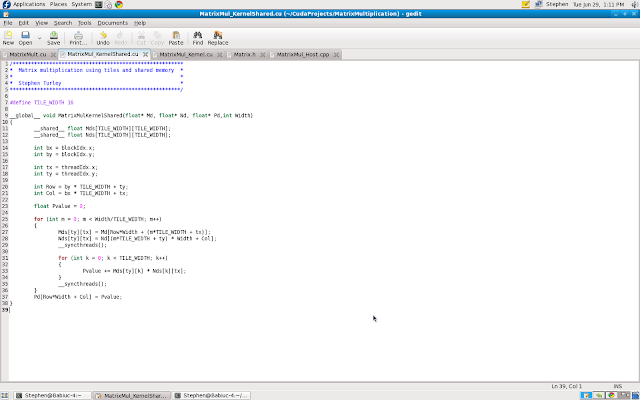

Let's look at the code:

You will notice at the top that I allocate two spaces in memory with the designation __shared__. This is how I tell CUDA that I want these variable stored in shared memory. You may be wondering why all the memory isn't shared if it is quicker. Well it is all about scope. The scope of global memory is device, meaning that every thread in the grid can access it at any time. When I store an entire matrix into the global memory and have thousands of threads accessing it, it creates quite a bottle neck. The scope of shared memory however, is block. This means that every thread in a block can access that blocks shared memory. It cannot, however, access another blocks shared memory. This keeps latency low and speed up. It also represents the physical layout of the memory cells on the chip.... but that is another story.

In order to stay withing the limiting size of shared memory I had to partition the matrix into what are called tiles. I made each tile 16X16 which is the same size of my blocks. Take a look at the code again and pay attention to the first for loop. Here each thread is responsible for loading an element into the shared sub-matrix. The following loop completes the calculation and the loop exits. The elements are then loaded back into global memory and the process is complete... well sort of.

The tricky part is realizing that we are executing 64 squared blocks of 16 squared threads. I have no way of controlling when the CUDA device will run each block, so I may have block 15,16 running first and block 1,65 running later and so on and so forth. Also one thread may take a little longer to execute than another. This is where the function __synchthreads(); comes in to play. This function is built into the metal so to speak. This just means that it is hardware instruction that tells all the threads in the block to wait until everybody is ready to move on to the next step. This is called a barrier synchronization.

Think of a barrier synchronization like kids getting on a bus. There may be 100 children running around screaming trying to find the correct bus to get them home. The children are like the threads... they know what they need to do but nobody knows when they will do it. So the bus driver must wait until everyone is on board before she departs. This is the barrier synchronization, the bus driver MUST wait for everyone. If she left early I'm sure there would be some very unhappy parents.

In the case of matrix multiplication, since every element must be loaded from the two input matrices for the threads to do their computations, I call __syncthreads() at the end of each element being loaded, and it makes the bus driver wait until the kids are on board.... or the threads are completed loading.

So all of this confusing tiling stuff must have done something. Check out this photo from the Nvidia CUDA visual profiler:

The bar graph illustrates clock cycles. The taller the bar, the longer it takes. Ignore the red and green bars and lets focus on the blue and yellow. The red and green are just memory moving around. The blue bar is my original kernel and the yellow is the new tiled/shared kernel. They do the exact same calculation but the shared kernel does it about 7 times faster! Pretty cool ehh?

Thanks for reading!

No comments:

Post a Comment